SmartDQ

SmartDQ is an enterprise B2B software product, built for a telecom client, that uses AI/ML to check and fix customer data automatically. It finds errors, inconsistencies, and missing information in the client’s customer records and corrects them, making the data more accurate and reliable.

99% Data Issues Identified

50% Reduction in Manual Effort

Feb 2019 - Oct 2019

Role: Associate Product Manager | Wipro Ltd.

Team Size: 4

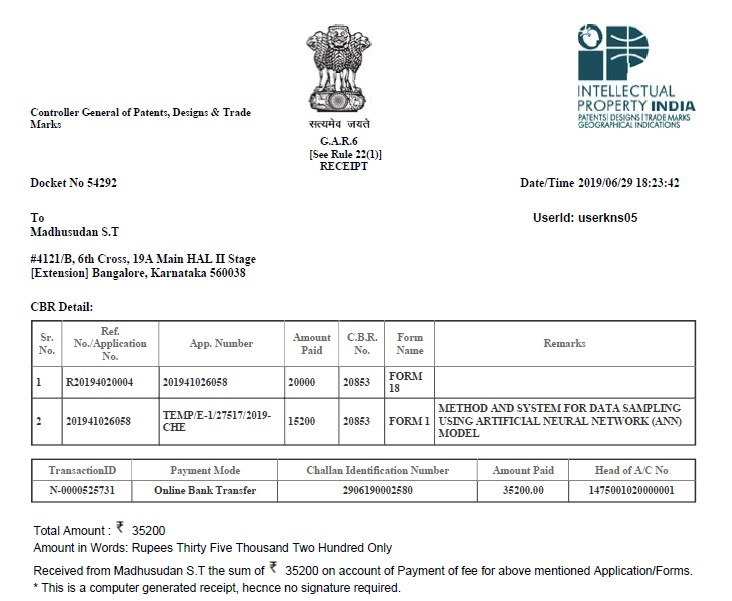

1 Patent

Filed for Unique Sampling Technique

Problem Statement

The client relied on random sampling, checking a fixed percentage of its records to find problems. Because the sample did not adapt to the dataset’s variability or prioritize high-impact areas, it often missed critical data quality issues, leaving persistent gaps and errors.

Manual quality checks were inadequate for the telecom’s vast and complex data, resulting in operational inefficiencies.

The rule-based remediation engines in use were rigid and struggled to address diverse data issues effectively, driving the need for an AI-based solution.

Product Opportunity

According to industry research, the global data quality tools market is expected to reach more than $5.5 billion by 2025. Wipro recognized a substantial market opportunity to leverage AI/ML-driven automated data quality solutions.

Solution

AI/ML-driven data profiling that focuses on unusual patterns instead of random samples and adjusts the sample size based on data quality, so that important issues are not missed.

Automated repair of data problems through smart workflows, making checks faster and more effective for the telecom company’s large and complex data.
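As a rough illustration of quality-adaptive sampling (a minimal sketch, not the patented method): assume records are already grouped into segments, a small pilot draw estimates each segment's issue rate, and the sampling fraction grows with that rate. The function names, the `is_suspect` check, and all default fractions are hypothetical.

```python
import random

def is_suspect(record):
    """Hypothetical cheap check: a record is suspect if any field is empty."""
    return any(value in (None, "") for value in record.values())

def adaptive_sample(segments, base_fraction=0.05, max_fraction=0.5,
                    pilot_size=50, seed=0):
    """Sample more heavily from segments whose pilot sample looks worse.

    segments: dict mapping segment name -> list of record dicts.
    Returns a dict mapping segment name -> list of sampled records.
    """
    rng = random.Random(seed)
    samples = {}
    for name, records in segments.items():
        if not records:
            samples[name] = []
            continue
        # Pilot: estimate the issue rate from a small random draw.
        pilot = rng.sample(records, min(pilot_size, len(records)))
        issue_rate = sum(is_suspect(r) for r in pilot) / len(pilot)
        # Scale the fraction linearly from base to max as the issue rate rises.
        fraction = base_fraction + (max_fraction - base_fraction) * issue_rate
        k = min(len(records), max(1, round(fraction * len(records))))
        samples[name] = rng.sample(records, k)
    return samples

# Made-up data: one clean segment and one segment with empty phone numbers.
clean = [{"name": "A", "phone": "123"} for _ in range(200)]
dirty = [{"name": "B", "phone": ""} for _ in range(200)]
result = adaptive_sample({"clean": clean, "dirty": dirty})
print({name: len(rows) for name, rows in result.items()})
```

With this made-up data the dirty segment receives a much larger sample than the clean one, which is the behavior a fixed-percentage random sample cannot provide.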

My Role

As Product Manager, I owned the entire product lifecycle:

Led user research—planning, executing, and analyzing client interviews and card sorting exercises with stakeholders from sales, engineering, and data operations teams.

Authored all user epics, job stories, and acceptance criteria.

Facilitated feature prioritization workshops and translated user needs into a must-have roadmap.

Drove design sprints, QA, engineering stand-ups, and direct demos to the client.

Owned tracking and communication for KPIs and release milestones.

Product Development Approach

Discovery: User Interviews, Requirement Gathering

Definition: User Stories, Acceptance Criteria, KPI Definition

Ideation: Brainstorming Sessions with the Team

Prioritization: MoSCoW Workshop with the Client and Project Team

Wireframing and Prototyping: User Flow Sketches, Clickable Prototypes

Delivery: Coordinated Sprints, Frequent Feedback Loops

Discovery

User Interviews

We conducted semi-structured interviews with 5+ stakeholders. Key things we hoped to learn:

What happens when there’s a data issue—how do they usually find out about it?

When was the last time a data problem affected customer billing or experience?

Which areas of their process do they wish moved more quickly?

How do they usually identify issues that might be difficult to spot manually?

What are the biggest challenges they face when trying to resolve those data issues?

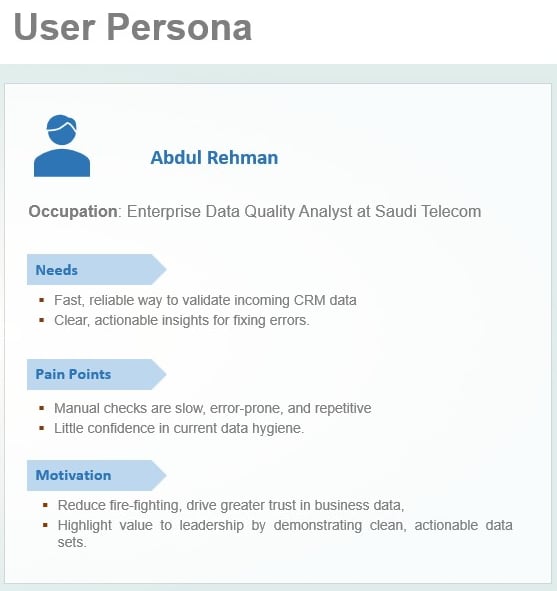

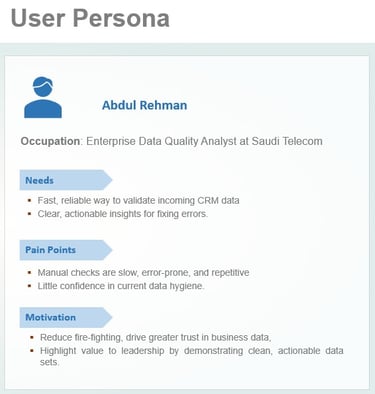

Definition

Here are the top use cases that I found during early customer calls. They highlighted the main challenges our solution should address.

Job Stories

I want the system to automatically profile the data and flag anomalies when I upload a new CRM dataset

So that I can prioritize my remediation efforts immediately.

Example: A data profile report highlighting crucial data quality issues in red, less crucial issues in yellow, and columns with no issues in green.

I want to generate a representative sample from the entire dataset

So that I can check the overall health of the data and investigate the root cause if the Data Quality Score drops by 10%.

Example: Generate a data sample and determine a data health score by evaluating data consistency, completeness, accuracy, and validity.

I want step-by-step remediation options when I select a flagged data issue

So that I can resolve errors efficiently and audit changes made.

Example: Automatically generate remediation rules, imputation logic for missing data, and deduplication logic to fix data issues using AI.

I want a downloadable report showing before/after metrics when cleaning is complete

So that I can share documented improvements with my manager.

Example: An export option to download the data profile report and the cleansed dataset.
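The profiling and health-score examples in these job stories could be sketched as follows. This is an illustration under stated assumptions, not SmartDQ's implementation: it scores only completeness and validity (accuracy and consistency would need reference data and cross-field rules), and the validators, the equal weighting, and the red/yellow/green thresholds are all hypothetical.

```python
import re

# Hypothetical validity rules; real rules would come from the client's data dictionary.
VALIDATORS = {
    "email": lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "phone": lambda v: re.fullmatch(r"\d{10}", v) is not None,
}

def profile_column(records, column):
    """Return (completeness, validity), each in the range 0..1, for one column."""
    values = [r.get(column) for r in records]
    present = [v for v in values if v not in (None, "")]
    completeness = len(present) / len(values) if values else 0.0
    check = VALIDATORS.get(column, lambda v: True)
    validity = sum(check(v) for v in present) / len(present) if present else 0.0
    return completeness, validity

def flag(score, red_below=0.8, yellow_below=0.95):
    """Map a 0..1 score to a red/yellow/green flag for the profile report."""
    if score < red_below:
        return "red"
    if score < yellow_below:
        return "yellow"
    return "green"

def health_report(records, columns):
    """Per-column score (mean of completeness and validity) plus an overall score."""
    report = {}
    for column in columns:
        completeness, validity = profile_column(records, column)
        score = (completeness + validity) / 2
        report[column] = {"score": score, "flag": flag(score)}
    overall = sum(c["score"] for c in report.values()) / len(report) if report else 0.0
    return report, overall

# Made-up records for illustration only.
records = [
    {"email": "a@x.com", "phone": "1234567890"},
    {"email": "bad", "phone": "1234567890"},
    {"email": "", "phone": "1234567890"},
    {"email": "c@y.org", "phone": "1234567890"},
]
report, overall = health_report(records, ["email", "phone"])
print(report, round(overall, 3))
```

Here the `email` column is flagged red (one missing and one malformed value) while `phone` is green, so a user would know where to start remediation.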

Key Performance Indicators (KPI Definition)

Percentage of data quality issues automatically detected and flagged

Reduction in manual/rule-based remediation time (time to clean a batch of ~1,000,000 records)

Percentage of CRM records remediated in under 2 hours
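For concreteness, the three KPIs above reduce to simple ratios. The sketch below shows one way to compute them; the function names and the input shapes are assumptions, and the numbers in the usage lines are made up rather than project results.

```python
def detection_rate(auto_flagged, total_known_issues):
    """Share of known issues that were flagged automatically (0..1)."""
    return auto_flagged / total_known_issues if total_known_issues else 0.0

def time_reduction(baseline_hours, new_hours):
    """Relative reduction in remediation time versus the baseline (0..1)."""
    return (baseline_hours - new_hours) / baseline_hours if baseline_hours else 0.0

def share_within_sla(batches, sla_hours=2.0):
    """Share of records remediated in under sla_hours.

    batches: list of (record_count, hours_to_remediate) tuples.
    """
    total = sum(count for count, _ in batches)
    within = sum(count for count, hours in batches if hours < sla_hours)
    return within / total if total else 0.0

# Illustrative inputs only.
print(detection_rate(45, 50))
print(time_reduction(10, 6))
print(share_within_sla([(1000, 1.5), (1000, 3.0)]))
```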

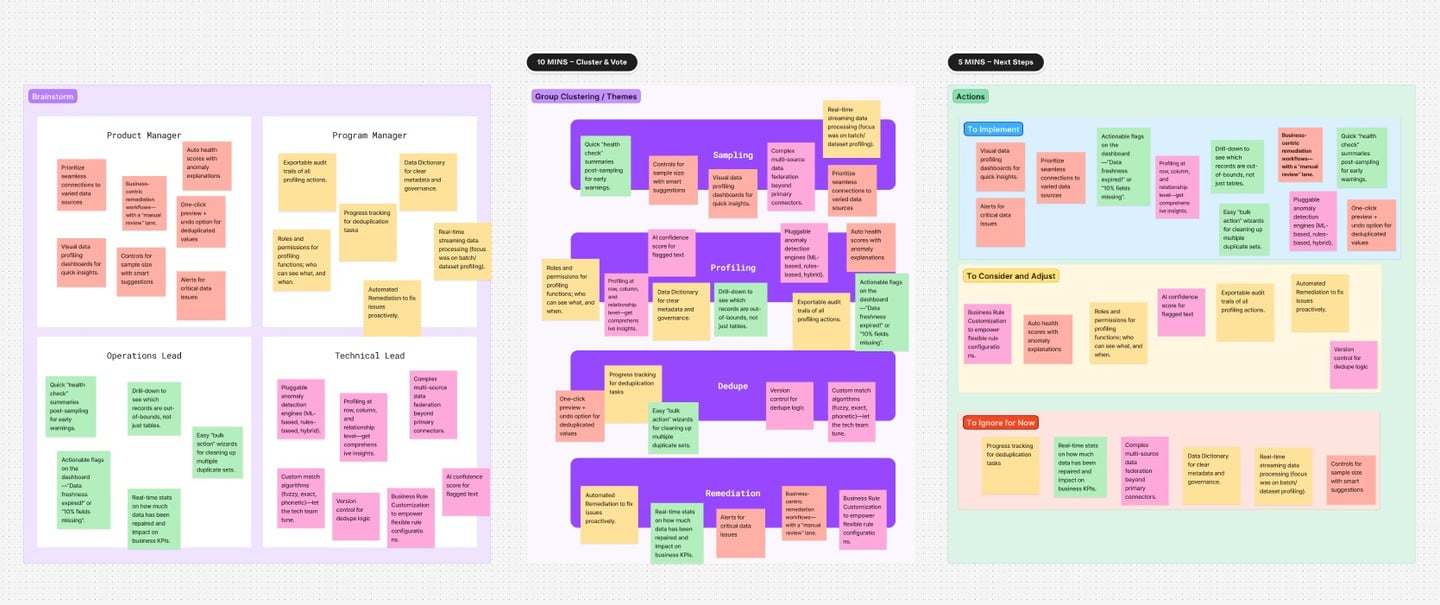

Ideation

I ran a brainstorming session with the team to generate ideas. We started by collecting ideas from four perspectives (Product Manager, Program Manager, Operations Lead, and Tech Lead) to capture the full picture: what users want, what is realistic to deliver, how it works in daily operations, and how to keep it technically sound. We then grouped those ideas into SmartDQ’s core pillars: Sampling, Profiling, Deduplication, and Remediation. This ensured we covered the whole data quality journey, from spotting problems to fixing them.

Some of the key ideas we came up with are listed below:

Pick smart samples that represent the whole data to understand quality better.

Build a system that finds and removes duplicate text and improves over time as it learns.

Use smart techniques to fill in missing numbers more accurately than simple averages.

Automatically check data quality and give an overall health score when data is uploaded.

Suggest easy rules to fix poor-quality data based on patterns found.
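Two of the ideas above, duplicate detection and imputation beyond a plain average, can be illustrated with a small standard-library sketch. It is a simplified stand-in, not the product's logic: the string-similarity threshold, the group-median rule, and every name here are hypothetical, and nothing in it learns over time.

```python
from difflib import SequenceMatcher
from statistics import median

def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())

def find_near_duplicates(names, threshold=0.9):
    """Return index pairs (i, j), i < j, whose normalized similarity >= threshold."""
    cleaned = [normalize(n) for n in names]
    pairs = []
    for i in range(len(cleaned)):
        for j in range(i + 1, len(cleaned)):
            if SequenceMatcher(None, cleaned[i], cleaned[j]).ratio() >= threshold:
                pairs.append((i, j))
    return pairs

def impute_by_group(records, group_key, value_key):
    """Fill missing value_key with the median of records sharing group_key.

    Falls back to the overall median when a group has no known values.
    Returns new dicts; the input records are left unchanged.
    """
    known = [r[value_key] for r in records if r.get(value_key) is not None]
    overall = median(known) if known else None
    by_group = {}
    for r in records:
        if r.get(value_key) is not None:
            by_group.setdefault(r[group_key], []).append(r[value_key])
    filled = []
    for r in records:
        if r.get(value_key) is None:
            group_values = by_group.get(r[group_key])
            value = median(group_values) if group_values else overall
            r = {**r, value_key: value}
        filled.append(r)
    return filled

# Made-up data for illustration only.
print(find_near_duplicates(["John  Smith", "john smith", "Jane Doe"]))
bills = [
    {"plan": "A", "bill": 10}, {"plan": "A", "bill": 30},
    {"plan": "B", "bill": 100}, {"plan": "A", "bill": None},
    {"plan": "C", "bill": None},
]
print(impute_by_group(bills, "plan", "bill"))
```

The pairwise comparison is quadratic, so it suits a sample rather than a full batch of a million records; a real system would first narrow candidates, for example by grouping on a shared key.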

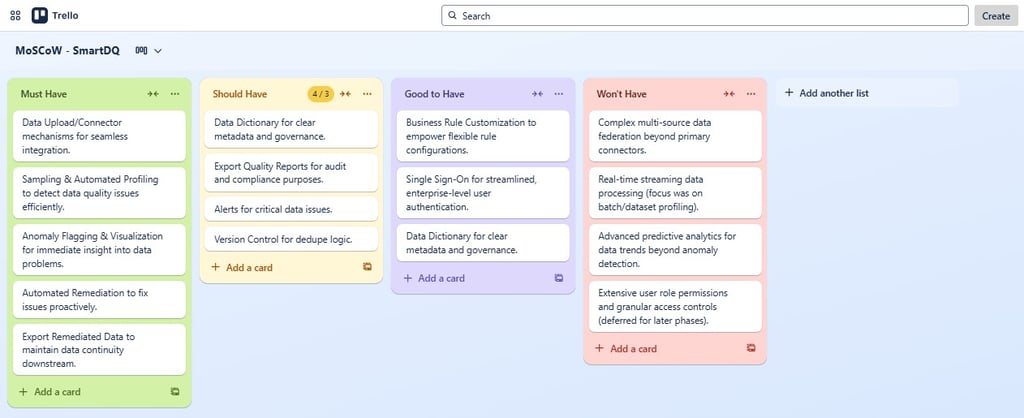

Prioritization - MoSCoW

To stay focused on delivering the most value fast, we turned our brainstormed ideas into a MoSCoW priority map, making it clear what to tackle first.

Patent Filing for Sampling Methodology

I developed a sequential prediction model powered by business-driven predictor variables, ensuring that sample datasets reflected the full range of potential data quality issues. This made remediation more targeted and significantly reduced the time spent on exhaustive checks. Patent applications for the approach were filed in the US and India in June 2019, and the approach was later integrated into Wipro’s flagship data quality platform, benefiting multiple clients.

Wireframes: How the Solution Worked

Delivery

Bringing SmartDQ to life meant expertly guiding the product from concept to reality:

Design & Prototyping: I sketched user flows and built clickable prototypes, iteratively refining screens for data onboarding, anomaly detection, and remediation based on hands-on feedback.

Agile Execution: I led design sprints, daily QA stand-ups, and coordinated closely with engineering to ensure rapid progress and resilient builds.

Stakeholder Engagement: Regular client demos and user testing sessions kept solution-fit and usability front-and-center, ensuring buy-in and course correction where needed.

Documentation & KPIs: I authored user stories, acceptance criteria, and defined clear KPIs to measure our impact.

Customer-Centric Ethos: By responding to user feedback and “hidden” frustrations, we centered empathy in our approach, delivering a solution that worked intuitively in the background, empowering users to focus on high-value work.

What Went Well

Built for Real Needs: Close collaboration with the client from interviews to delivery revealed true pain points, like tedious manual data cleanup. We focused on creating a trusted, practical solution.

Empathy First: Listening beyond words showed users wanted SmartDQ to work quietly in the background, flagging and fixing issues so they could focus on meaningful work.

Clear & Collaborative: Frequent demos, rapid feedback, and transparent updates kept everyone aligned and excited as the product came to life.

What Could Have Gone Better

Sharper Focus: A stricter “essentials-first” approach could’ve delivered value faster.

More Resources: Extra UI/UX and project management support would have sped progress and freed up time for deeper user engagement.

Smarter Tracking: More detailed metrics could have better showcased the product’s impact over time.

Smaller, Quicker Releases: More frequent updates would have allowed faster learning and user wins.

Outcomes

99% of data issues identified and addressed in project scope.

50% reduction in remediation cycle time.

Enhanced billing accuracy and business confidence in CRM data.

Patented sampling technique now core to Wipro’s data quality toolkit.

SmartDQ for the Telecom client moved the needle on enterprise data quality by directly translating user frustrations into a targeted, automated solution. Every artifact—from interviews and workflow sketches to prioritization grids and KPIs—fed into building a product that didn’t just flag issues, but helped users meaningfully fix them, measure progress, and showcase wins to leadership.